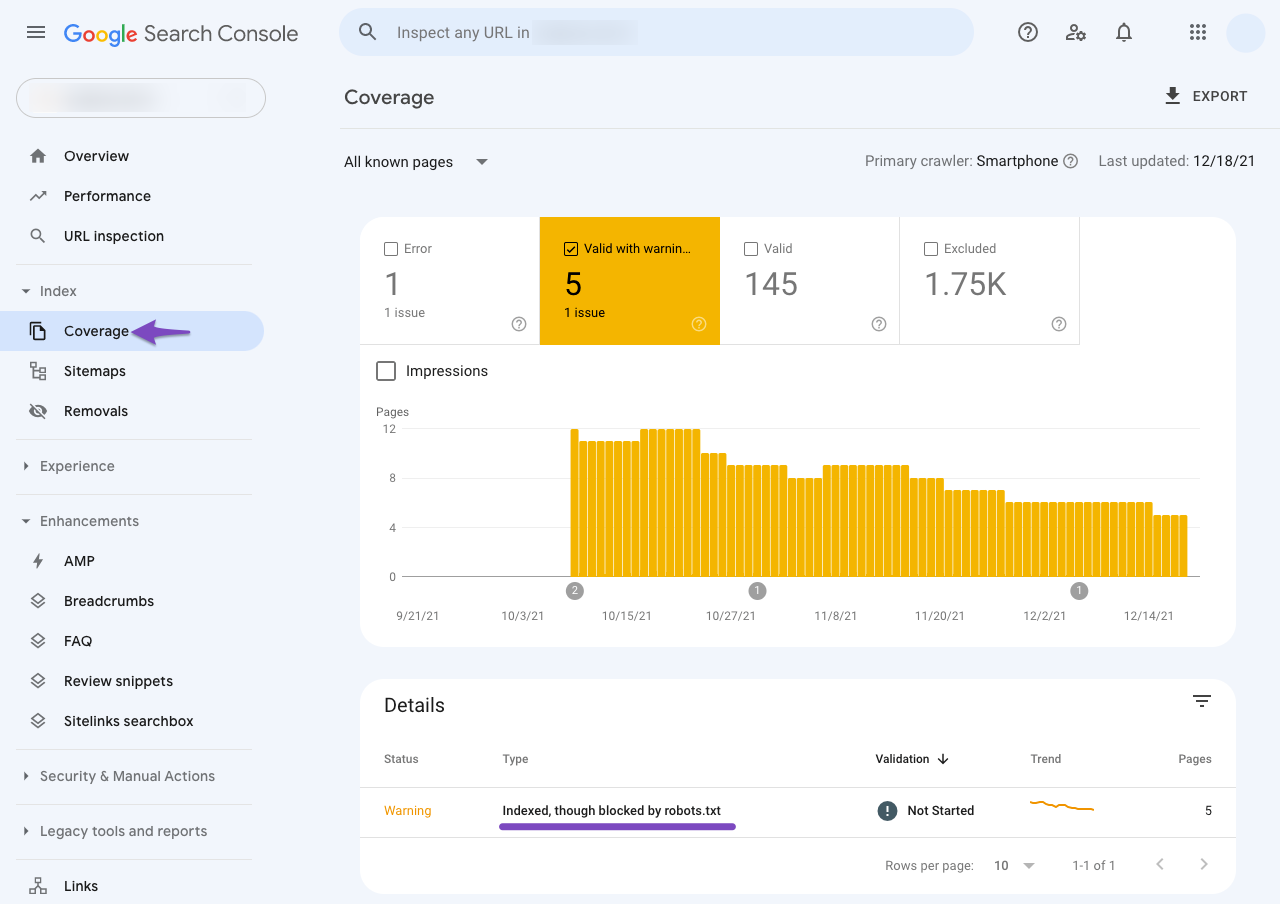

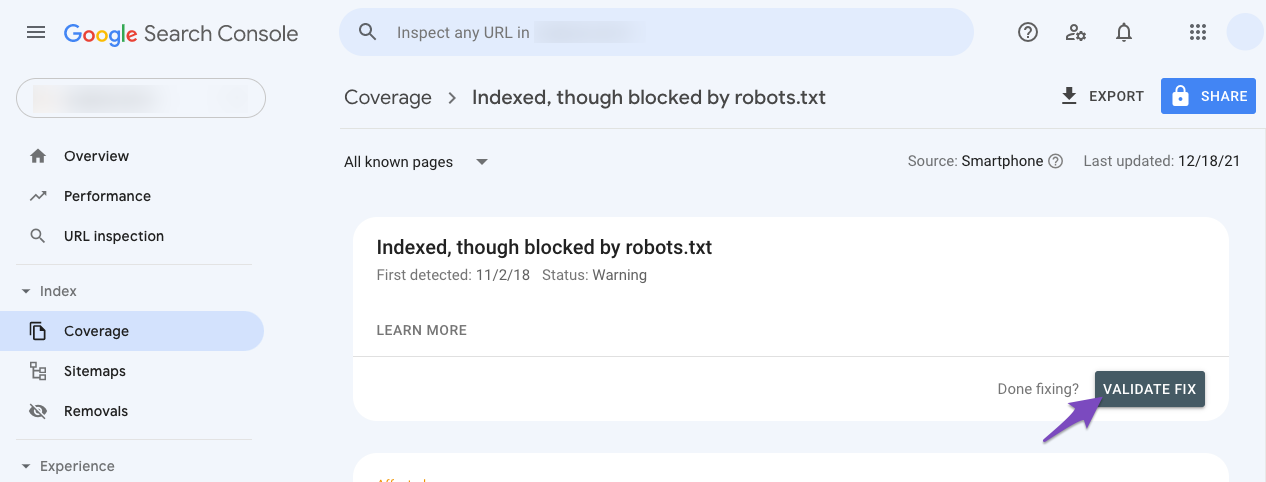

If you’ve received an email alert about, or noticed, the warning ‘Indexed, though blocked by robots.txt’ in your Google Search Console, this knowledgebase article shows you how to troubleshoot and fix it.


1 What Does the Error ‘Indexed, Though Blocked by Robots.txt’ Mean?

The warning simply means:

- Google has found your page and indexed it in search results.

- But it has also found a rule in robots.txt that tells crawlers not to crawl the page.

Because these two signals conflict, Google flags the URL with a warning in Google Search Console so that you can look into it and decide on a course of action.

If you blocked the page to keep it out of the index, be aware that although Google respects robots.txt in most cases, robots.txt alone cannot prevent a page from being indexed. There can be many reasons for this; for example, an external site may link to your blocked page, which can lead Google to index it with the little information available.
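The crawl side of this can be checked on its own. The minimal sketch below, assuming a hypothetical robots.txt and the placeholder domain example.com, uses Python's standard `urllib.robotparser` to answer only one question: may Googlebot fetch this URL? A `False` answer means crawling is blocked; it says nothing about whether the URL is indexed, which is exactly why this warning can appear.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: everything under /private/ is disallowed for all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt allows user_agent to fetch url (crawling only, not indexing)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Blocked from crawling, yet Google may still index it if other sites link to it.
    print(is_crawlable(ROBOTS_TXT, "https://example.com/private/landing-page/"))  # False
    # Not covered by any rule, so crawling is allowed.
    print(is_crawlable(ROBOTS_TXT, "https://example.com/blog/"))  # True
```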

On the other hand, if the page is supposed to be indexed but was accidentally blocked by robots.txt, you should unblock it in robots.txt so that Google’s crawlers can access the page.

Now that you understand the idea behind this warning, keep in mind that its practical causes vary widely depending on your CMS and technical implementation. So in this article, we’ll walk through a comprehensive way to debug and fix the warning.

2 Export the Report from Google Search Console

Small websites might have only a handful of URLs under this warning, but complex websites and eCommerce sites can easily have hundreds or even thousands. Since it isn’t practical to review all of them inside GSC, you can export the report from Google Search Console and open it in a spreadsheet editor.

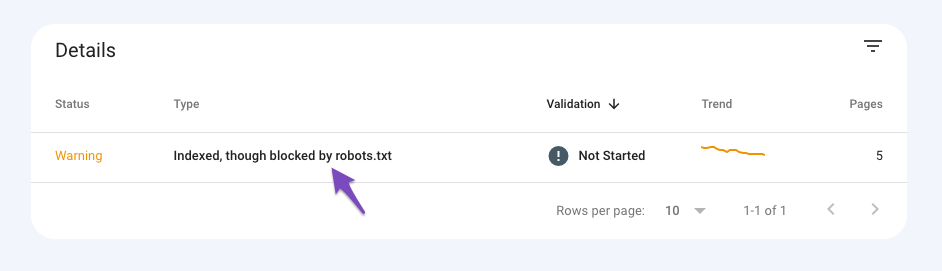

To export, click the warning, which is available under Google Search Console Dashboard → Coverage → Valid With Warnings.

On the next page, click the Export option in the top-right corner to export all the URLs affected by this warning. From the list of export options, choose a format you can download and open in the spreadsheet editor of your choice.

Once you’ve exported the URLs, the very first thing to figure out for each of them is whether the page should be indexed or not, because your course of action depends entirely on that answer.
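With a long export, it can help to answer that question per site section rather than per URL, since URLs under the same path (tag archives, cart pages, and so on) usually share the same answer. The sketch below assumes you saved the report as a CSV file with a header column named `URL`; the column name, the sample rows, and the helper names `read_urls` and `count_by_section` are illustrative assumptions, so adjust them to match your actual file.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlparse


def read_urls(csv_text: str, column: str = "URL") -> list[str]:
    """Return the non-empty values of the URL column from an exported CSV (column name assumed)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row[column].strip() for row in reader if (row.get(column) or "").strip()]


def count_by_section(urls: list[str]) -> Counter:
    """Count URLs per first path segment, e.g. /tag/ or /cart/."""
    counts: Counter = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        counts[f"/{segments[0]}/" if segments else "/"] += 1
    return counts


# Hypothetical sample of an exported report; real exports may use different columns.
SAMPLE_EXPORT = """URL,Last crawled
https://example.com/tag/shoes/,2021-05-01
https://example.com/tag/boots/,2021-05-02
https://example.com/cart/,2021-05-03
"""

if __name__ == "__main__":
    urls = read_urls(SAMPLE_EXPORT)
    # Largest sections first: decide "index or not" once per section.
    for section, total in count_by_section(urls).most_common():
        print(section, total)
```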

3 Pages to Be Indexed

If you determine that the page is supposed to be indexed, test your robots.txt to identify any rule that prevents Googlebot from crawling the page.

To debug your robots.txt file, you can follow the exact steps discussed below.

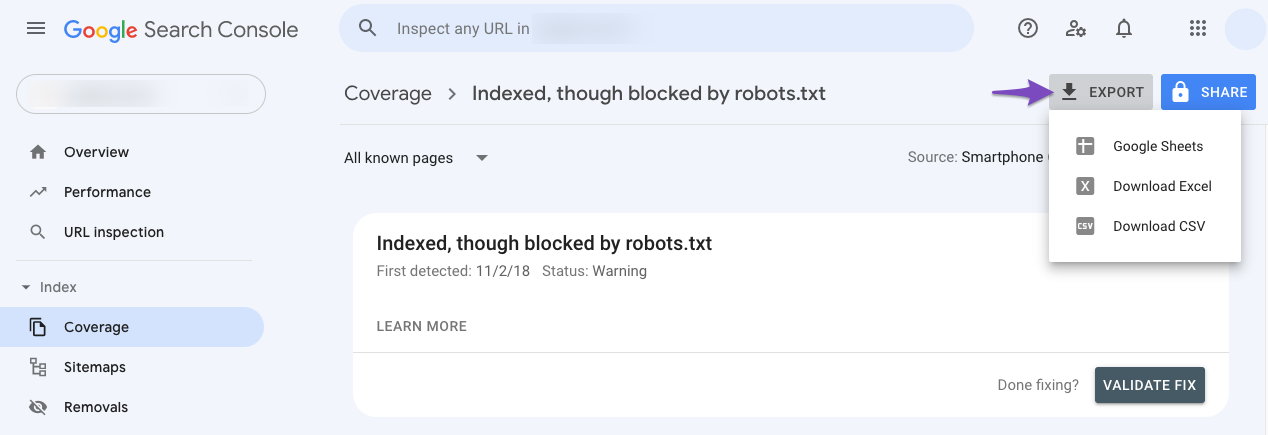

3.1 Open robots.txt Tester

First, head over to the robots.txt Tester. If your Google Search Console account is linked to more than one website, select your website from the list of sites shown in the top-right corner. Google will then load your website’s robots.txt file. Here is what it looks like.

3.2 Enter the URL of Your Site

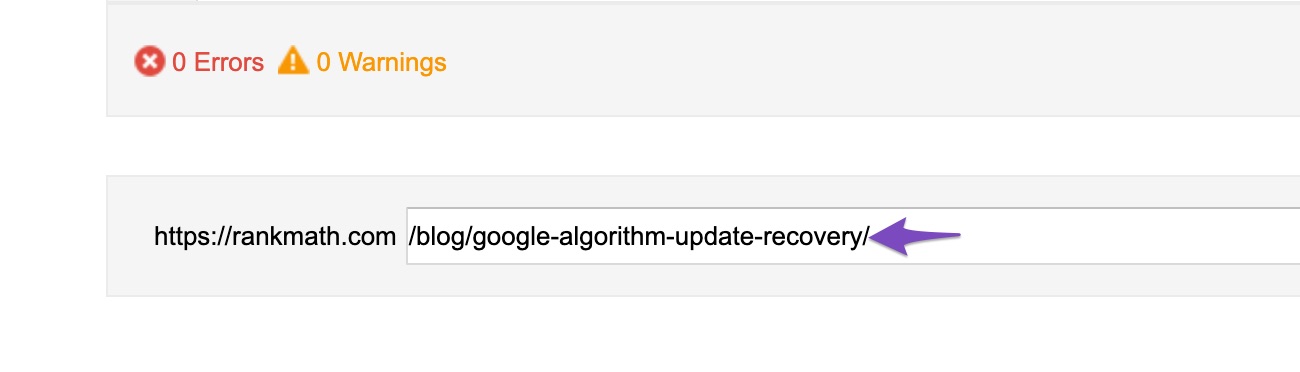

At the bottom of the tool, you will find a box where you can enter a URL from your website for testing. Enter a URL from the spreadsheet you downloaded earlier.

3.3 Select the User-Agent

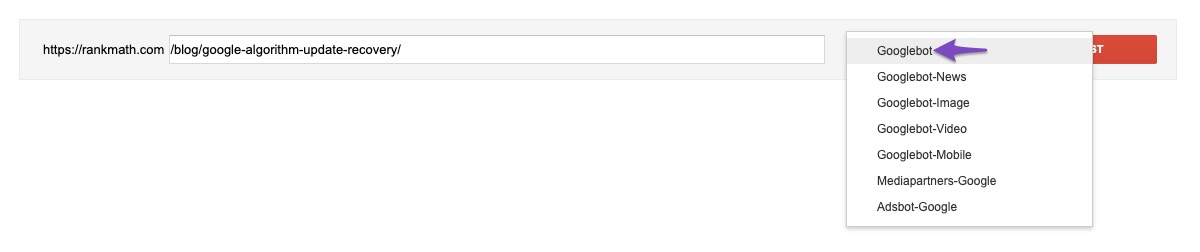

From the drop-down available on the right side of the text box, select the user-agent you want to simulate (Googlebot in our case).

3.4 Validate Robots.txt

Finally, click the Test button.

The tool instantly checks whether Googlebot can access the URL under your robots.txt configuration, and the Test button changes to ACCEPTED or BLOCKED accordingly.
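If you have many URLs from the spreadsheet, you can run a rough version of this check locally before testing individual URLs in the Tester. The sketch below uses Python's standard `urllib.robotparser` with a hypothetical robots.txt and labels each URL ACCEPTED or BLOCKED; `check_urls` is a hypothetical helper name. Keep in mind that this parser follows the original robots.txt conventions: it applies the first matching rule in file order and does not understand the `*` and `$` wildcards, so its verdict can differ from Googlebot's. Treat the Tester's result as authoritative.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with a Googlebot-specific group and an open group for everyone else.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /tag/

User-agent: *
Disallow:
"""


def check_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> dict[str, str]:
    """Label each URL ACCEPTED or BLOCKED for user_agent, mirroring the Tester's button."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: "ACCEPTED" if parser.can_fetch(user_agent, url) else "BLOCKED" for url in urls}


if __name__ == "__main__":
    results = check_urls(ROBOTS_TXT, ["https://example.com/tag/shoes/", "https://example.com/blog/hello/"])
    for url, verdict in results.items():
        print(verdict, url)
```

To check the live file instead of a pasted copy, pass your robots.txt URL to `RobotFileParser(...)` and call its `read()` method in place of `parse()`.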

If access is blocked, the code editor in the center of the screen also highlights the rule in your robots.txt that is responsible.
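To find the responsible rule outside the Tester, the sketch below applies the precedence Google documents for robots.txt: among the rules that match a URL's path, the one with the longest path wins, and if an Allow and a Disallow rule are equally long, Allow wins; `*` matches any sequence of characters and a trailing `$` marks the end of the URL. It is a simplified illustration, not Google's parser: it assumes exact user-agent token matching with a fallback to the `*` group, skips percent-encoding normalization, and the function names and sample file are hypothetical.

```python
import re
from typing import Optional
from urllib.parse import urlparse

Rule = tuple[str, str, int]  # (directive, path pattern, line number in robots.txt)


def parse_groups(robots_txt: str) -> list[tuple[list[str], list[Rule]]]:
    """Split robots.txt into (user_agents, rules) groups."""
    groups: list[tuple[list[str], list[Rule]]] = []
    agents: list[str] = []
    rules: list[Rule] = []
    for line_no, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if rules:  # a user-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules = [], []
            agents.append(value.lower())
        elif field in ("allow", "disallow") and agents:
            rules.append((field, value, line_no))
    if agents:
        groups.append((agents, rules))
    return groups


def pattern_matches(pattern: str, path: str) -> bool:
    """Match a rule path, treating * as any characters and a trailing $ as end of URL."""
    if not pattern:
        return False  # an empty Allow/Disallow value matches nothing
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    matcher = re.fullmatch if anchored else re.match  # re.match anchors only at the start
    return matcher(regex, path) is not None


def find_deciding_rule(robots_txt: str, url: str, user_agent: str = "googlebot") -> Optional[Rule]:
    """Return the rule that decides access to url, or None if no rule matches (crawl allowed)."""
    parsed = urlparse(url)
    path = (parsed.path or "/") + (f"?{parsed.query}" if parsed.query else "")
    groups = parse_groups(robots_txt)
    agent = user_agent.lower()
    # Simplification: exact user-agent token match, falling back to the * group.
    selected = [rules for agents, rules in groups if agent in agents]
    if not selected:
        selected = [rules for agents, rules in groups if "*" in agents]
    candidates = [rule for rules in selected for rule in rules if pattern_matches(rule[1], path)]
    if not candidates:
        return None
    # The longest matching path wins; on a tie, allow beats disallow.
    return max(candidates, key=lambda rule: (len(rule[1]), rule[0] == "allow"))


# Hypothetical robots.txt for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /shop/
Allow: /shop/products/
Disallow: /*?orderby=
"""

if __name__ == "__main__":
    print(find_deciding_rule(ROBOTS_TXT, "https://example.com/shop/cart/"))  # ('disallow', '/shop/', 2)
```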

3.5 Edit & Debug

If the robots.txt Tester finds any rule preventing access, you can try editing the rule right inside the code editor and then run through the test once again.

You can also refer to our dedicated knowledgebase article on robots.txt to learn more about the supported rules, which will help you edit them here.

Once you’ve fixed the rule, keep in mind that this is only a debugging tool: changes you make here are not applied to your website’s robots.txt until you copy and paste the contents into it.
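Because edits in the Tester are not live, it can be useful to compare the edited copy against your current file before pasting it into your site, to confirm that the change unblocks the pages you intended and nothing else. The sketch below, with hypothetical file contents and helper names (`verdicts`, `changed_urls`), reports only the URLs whose crawlability changed. It relies on `urllib.robotparser`, which applies the first matching rule, so the Allow line is placed before the broader Disallow line; that way this parser and Google's longest-match rule reach the same verdict.

```python
from urllib.robotparser import RobotFileParser


def verdicts(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True (crawlable) or False (blocked)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


def changed_urls(before: str, after: str, urls: list[str]) -> dict[str, tuple[bool, bool]]:
    """Return {url: (old_verdict, new_verdict)} for URLs whose verdict changed."""
    old, new = verdicts(before, urls), verdicts(after, urls)
    return {url: (old[url], new[url]) for url in urls if old[url] != new[url]}


# Hypothetical current file and edited copy. The Allow line comes first so that
# Python's first-match parser and Google's longest-match rule agree.
BEFORE = """\
User-agent: *
Disallow: /shop/
"""
AFTER = """\
User-agent: *
Allow: /shop/products/
Disallow: /shop/
"""
URLS = [
    "https://example.com/shop/products/shoe/",
    "https://example.com/shop/cart/",
    "https://example.com/blog/",
]

if __name__ == "__main__":
    print(changed_urls(BEFORE, AFTER, URLS))
    # {'https://example.com/shop/products/shoe/': (False, True)}
```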

If you have any difficulty editing robots.txt, please contact support.

3.6 Export Robots.txt

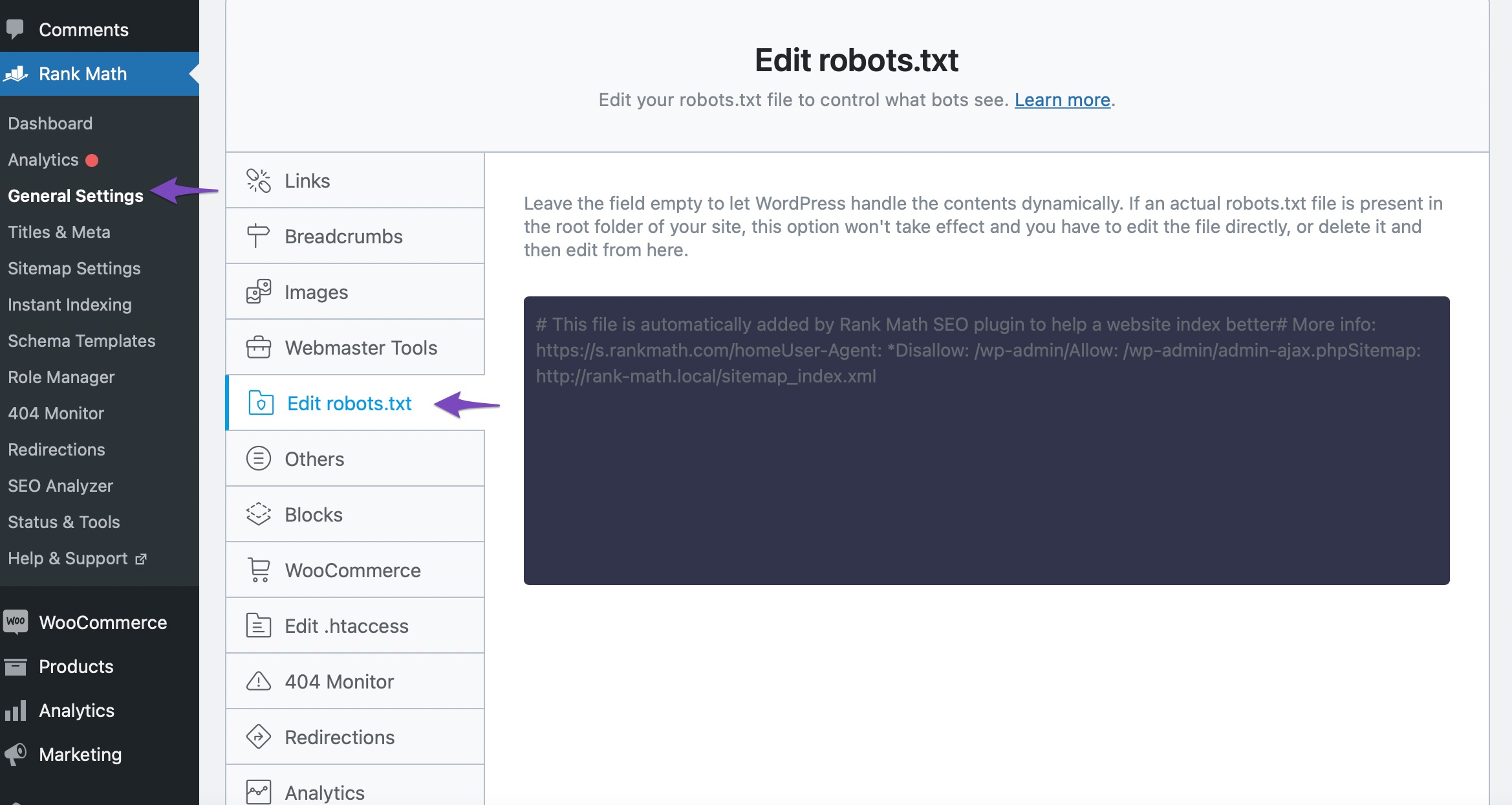

To add the modified rules to your robots.txt, go to Rank Math SEO → General Settings → Edit robots.txt in your WordPress admin area. If this option isn’t available, make sure you’re using Advanced Mode in Rank Math.

In the code editor in the middle of your screen, paste the code you copied from the robots.txt Tester, and then click the Save Changes button to apply the changes.

4 Pages Not to Be Indexed

If you determine that the page is not supposed to be indexed but Google has indexed it anyway, the cause is likely one of the reasons discussed below.

4.1 Noindex Pages Blocked Through Robots.txt

When a page should not be indexed in search results, it should be indicated by a Robots Meta directive and not through a robots.txt rule.

A robots.txt file only contains instructions for crawling. Remember, crawling and indexing are two separate processes.

Preventing a page from being crawled ≠ Preventing a page from being indexed

So to prevent a page from getting indexed, you can add a No Index Robots Meta using Rank Math.

However, if you add a No Index Robots Meta and at the same time block search engines from crawling these URLs, Googlebot cannot crawl the pages and therefore never sees the No Index Robots Meta.

Ideally, you should allow Googlebot to crawl through these pages, and based on the No Index Robots Meta, Google will drop the page from the index.
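The combination to look for is a page that carries a noindex directive while robots.txt blocks it. The sketch below flags that case for a single page, assuming you already have the page's HTML as a string (fetching it is left out). It checks only `<meta name="robots">` and `<meta name="googlebot">` tags in the HTML; the helper names, sample robots.txt, and return labels are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}  # names arrive lowercased
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for token in attributes.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


def diagnose(robots_txt: str, url: str, html: str, user_agent: str = "Googlebot") -> str:
    """Classify a page by whether it is crawlable and whether it carries noindex."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    crawlable = robots.can_fetch(user_agent, url)
    noindex = has_noindex(html)
    if noindex and not crawlable:
        return "conflict"  # Googlebot cannot crawl the page, so it never sees the noindex
    if noindex:
        return "noindex-visible"  # Google can crawl the page and drop it from the index
    if not crawlable:
        return "blocked-only"  # crawling is blocked, but the URL can still be indexed
    return "indexable"


# Hypothetical inputs for illustration.
BLOCKING_ROBOTS = "User-agent: *\nDisallow: /private/\n"
OPEN_ROBOTS = "User-agent: *\nDisallow:\n"
NOINDEX_HTML = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

if __name__ == "__main__":
    url = "https://example.com/private/page/"
    print(diagnose(BLOCKING_ROBOTS, url, NOINDEX_HTML))  # conflict
    print(diagnose(OPEN_ROBOTS, url, NOINDEX_HTML))  # noindex-visible
```

In the "conflict" case, the fix this section recommends is to remove the robots.txt block and let the No Index Robots Meta do its job.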

Note: Use robots.txt only for blocking files (like images, PDF, feeds, etc.) where it isn’t possible to add a No Index Robots Meta.

4.2 External Links to Blocked Pages

Pages that you have disallowed in robots.txt might still have links from external sites, and those links can lead Google to index the page.

Since crawlers are disallowed from the page, Google indexes it with only the limited information available from the linking page.

To resolve this issue, consider reaching out to the external site and requesting that they change the link to a more relevant URL on your website.

5 Conclusion — Validate Fix in Google Search Console

Once you’ve fixed the issues with the URLs, head back to the Google Search Console warning and then click the Validate Fix button. Now Google will recrawl these URLs and close the issue if the error is resolved.

And that’s it! We hope this article helped you fix the warning. If you still have any questions, feel free to contact our support team; we’re always here to help.